or, of assumptions, biases and filters

For those who still don't know, DALL·E 2 is a new AI system from OpenAI that can create art and realistic images from a text prompt written in natural language. Pretty impressive, I know! Because its filtering is still imperfect, the main part of OpenAI's mitigation strategy so far has been limiting access to trusted users, with whom the team directly reinforces the importance of following the use-case guidelines. After a month on the waitlist, I was invited to early access, and you can imagine my excitement and joy at finally getting my hands on it.

I've been experimenting with the system for a week now, and while it has been super insightful, I ran into some problems with the filtering that I wish had been taken into account. We've been asked to use it according to the content policy and to report any suspected violations directly to the team, so we aren't allowed to generate anything that isn't G-rated or that could be harmful.

Since I have only limited knowledge of how the system and its filtering work, I asked my brother-in-law Tornike, who studies AI, for tips on how to "talk" to the system to get better results and find its weaknesses. Here is some of what we've noticed so far:

1. When you want an image in a specific art style, you get better results if you phrase your prompt like the official title of an original piece.

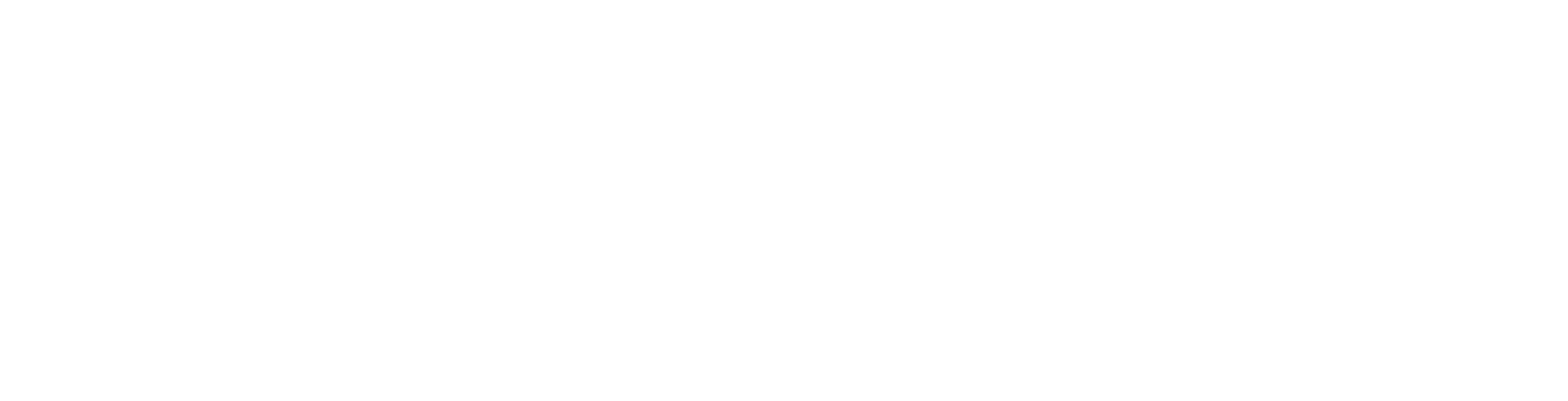

Left: Naruto And Gaara feeding ducks, in Niko Pirosmani style

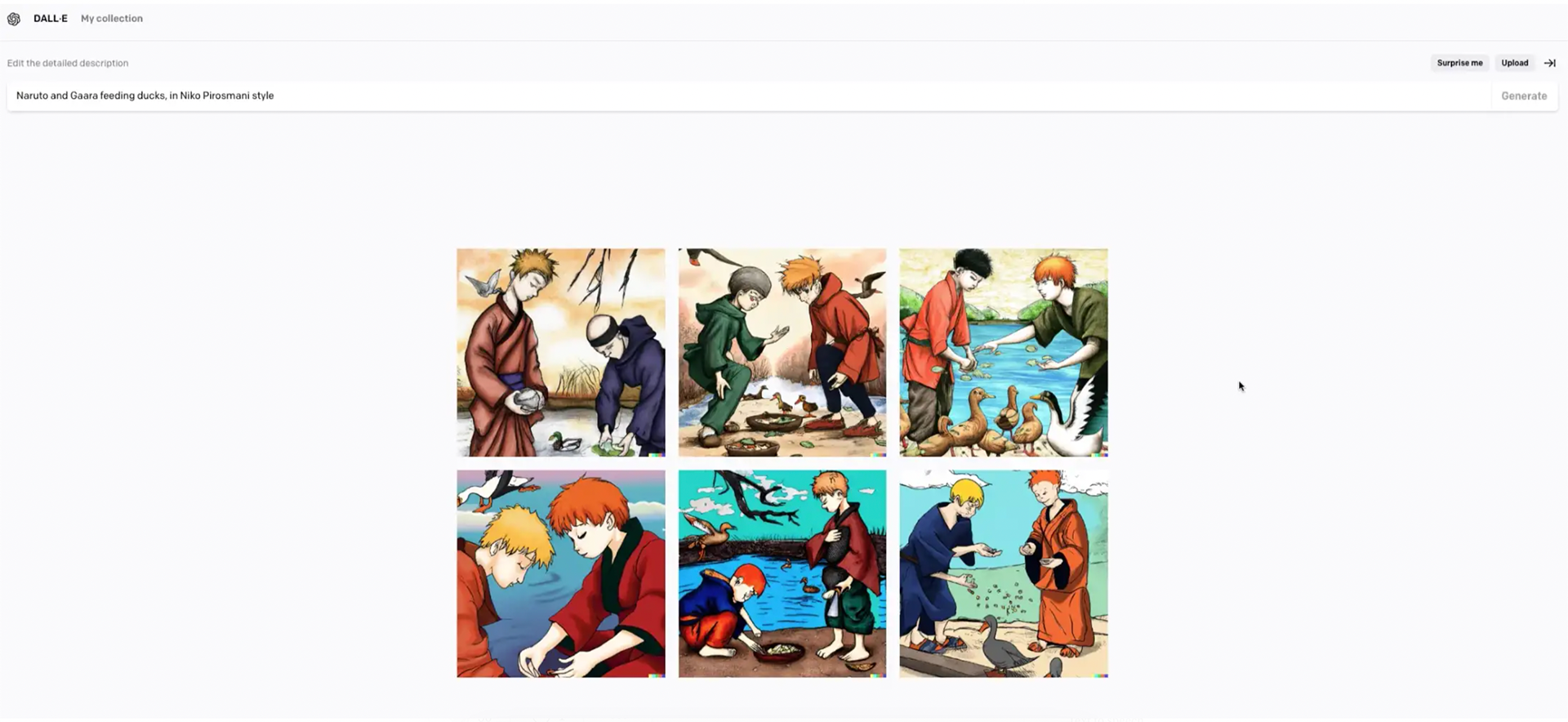

Right: Fisherman Naruto in a red shirt, Niko Pirosmani. Original title: მეთევზე (Fisherman), 1908, primitivism, portrait, oil cloth, Tbilisi, Georgia.


The second prompt made it easier for the system to generate what I initially had in mind.

2. Defaults and assumptions.

On their GitHub, we read: "The default behavior of the DALL·E 2 Preview produces images that tend to overrepresent people who are White-passing and Western concepts generally." [1] Yet for the prompt below, the system generated only men, all of them of Asian origin.

3. If you’re a fan of Pissarro’s work, I have bad news for you.

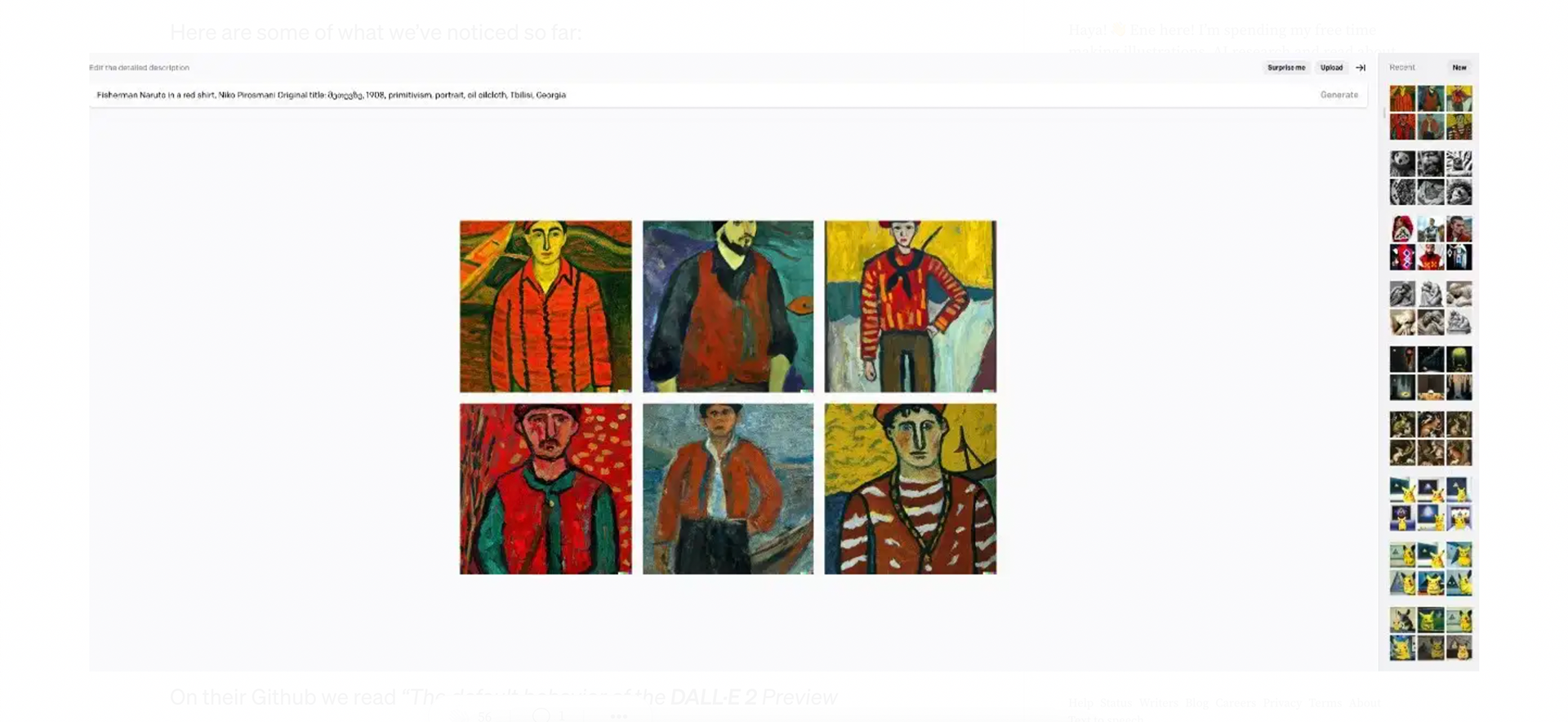

I’ve loved Pissarro’s work forever, so imagine my disappointment when I got this message:

Naturally, I went back to the content policy to find out what I had done wrong, since at first glance nothing in my prompt violated it. In the content policy, we read:

"Do not attempt to create, upload, or share images that are not G-rated or that could cause harm. Shocking: bodily fluids, obscene gestures, or other profane subjects that may shock or disgust." [2]

When I asked Tornike whether the combination of letters in the painter's surname ("Piss") could be the reason for the error, he mentioned that the training data had been pre-filtered. So I went back to GitHub to see whether they addressed explicit content and how I could avoid policy violations while still generating my original request.

“Despite the pre-training filtering, DALL·E 2 maintains the ability to generate content that features or suggests any of the following: nudity/sexual content, hate, or violence/harm. We refer to these categories of content using the shorthand “explicit” in this document, in the interest of brevity. Whether something is explicit depends on context. Different individuals and groups hold different views on what constitutes, for example, hate speech (Kocoń et al., 2021).”[3]
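To make Tornike's hunch about the surname concrete, here is a minimal sketch of how a naive keyword filter could trip over "Pissarro". This is a hypothetical illustration only: OpenAI has not said how its prompt filter works, and the blocklist, the example prompt and the function names below are assumptions made up for this post.

```python
import re

# Hypothetical blocklist for illustration; OpenAI's real filter terms are not public.
BLOCKLIST = {"piss"}


def naive_flag(prompt: str) -> bool:
    """Flag the prompt if a blocked term appears anywhere, even inside another word."""
    text = prompt.lower()
    return any(term in text for term in BLOCKLIST)


def word_boundary_flag(prompt: str) -> bool:
    """Flag the prompt only if a blocked term appears as a whole word."""
    text = prompt.lower()
    return any(re.search(rf"\b{re.escape(term)}\b", text) for term in BLOCKLIST)


# Hypothetical prompt, not the exact one I used.
prompt = "A painting in the style of Camille Pissarro"
print(naive_flag(prompt))          # True: "piss" is found inside "Pissarro"
print(word_boundary_flag(prompt))  # False: "Pissarro" is not the whole word "piss"
```

Whole-word matching would spare "Pissarro", but it would not help with a prompt like the "Moby Dick" one if that was blocked for a similar reason, because there the flagged word stands on its own. That is one reason why, as the passage above says, whether something is explicit depends on context.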

Left: A painting of Moby Dick whale in the pink ocean, yellow background, sun setting, impressionism

Right: A painting of a big blue whale in the pink ocean, yellow background, sun setting, impressionism

To generate what I originally wanted, I used the "visual synonyms" approach described in the documentation: prompts for things that look similar to filtered objects or concepts, e.g. ketchup for blood. While the pre-training filters do appear to have stunted the system's ability to generate explicitly harmful content when asked for it directly, it is still possible to describe the desired content visually and get similar results. According to OpenAI, effectively mitigating this would require training prompt classifiers conditioned on the content they lead to, as well as on the explicit language in the prompt.[4]

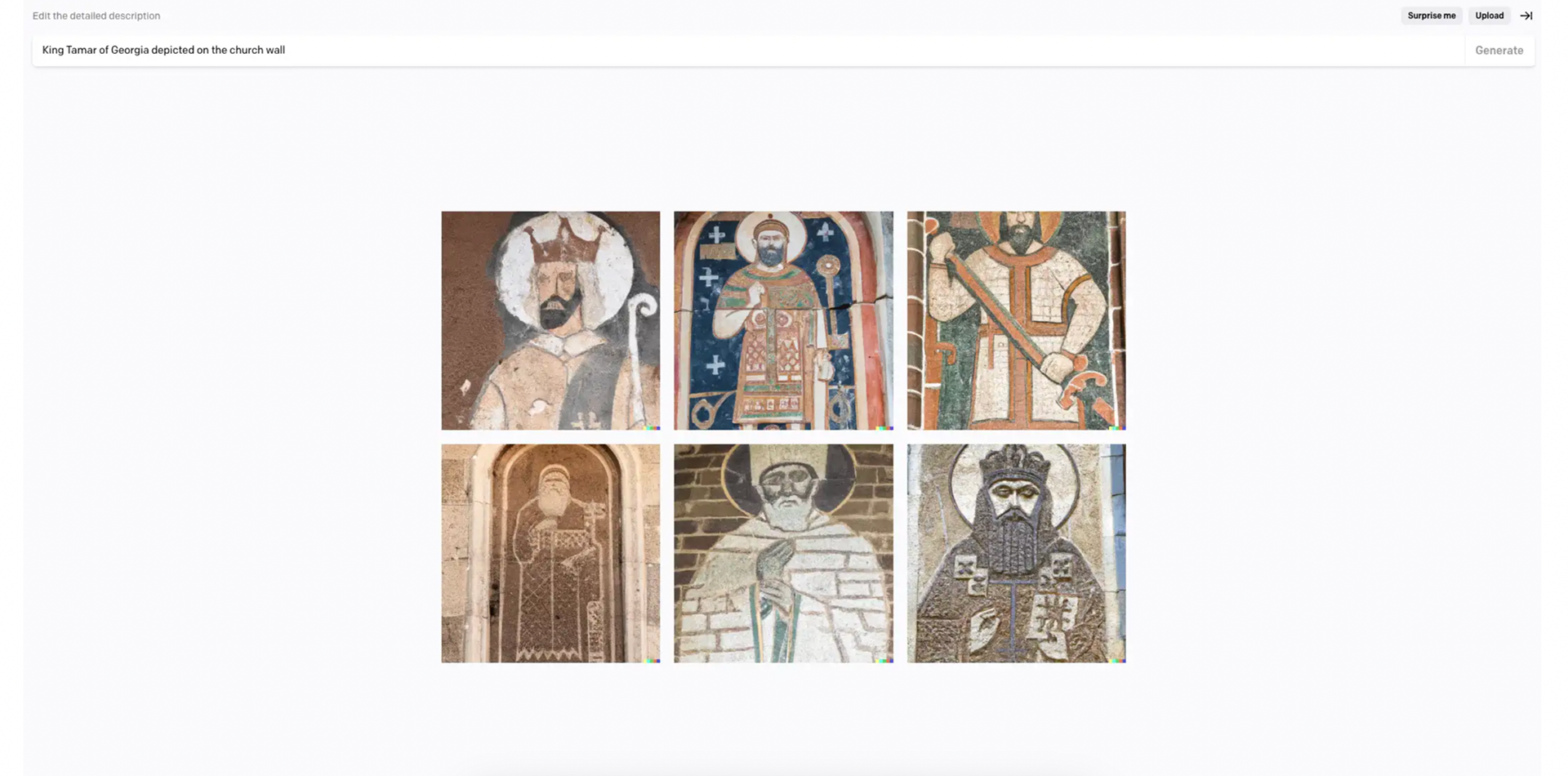

Also worth mentioning: I tried prompts with traditional Georgian themes. I wasn't surprised that some of the images didn't match my prompts; it was as if the system didn't "know" what I was asking for and fell back on vague, generic visuals.

The right prompt above was a bit tricky. The word "King" led the system to assume I wanted a man, and while it captured the general idea of Christianity, a church and Georgian dress (triggered by "Georgia" and "church"), the result was still flawed.

I'm not sure how they will address these issues, but so far experimenting with DALL·E 2 has been the main highlight of the past few weeks. I hope they will invite more artists to try and test the system.